Mpi.h Dev C++


This is a short introduction to the Message Passing Interface (MPI), designed to convey the fundamental operation and use of the interface. It is intended for readers with some background in programming C, and should deliver enough information to allow readers to write and run their own (very simple) parallel C programs using MPI.

There exists a version of this tutorial for Fortran programmers called Introduction to the Message Passing Interface (MPI) using Fortran.

What is MPI?

MPI is a library of routines that can be used to create parallel programs in C or Fortran 77. Standard C and Fortran include no constructs supporting parallelism, so vendors have developed a variety of extensions to allow users of those languages to build parallel applications. The result has been a spate of non-portable applications, and a need to retrain programmers for each platform upon which they work. The MPI standard was developed to ameliorate these problems. It is a library that runs with standard C or Fortran programs, using commonly available operating system services to create parallel processes and exchange information among these processes.

MPI is designed to allow users to create programs that can run efficiently on most parallel architectures. The design process included vendors (such as IBM, Intel, TMC, Cray, Convex, etc.), parallel library authors (involved in the development of PVM, Linda, etc.), and applications specialists. The final version for the draft standard became available in May of 1994.

MPI can also support distributed program execution on heterogeneous hardware. That is, you may run a program that starts processes on multiple computer systems to work on the same problem. This is useful with a workstation farm.

Hello world

Here is the basic Hello world program in C using MPI:

If you compile hello.c with a command like

you will create an executable file called hello, which you can execute by using the mpirun command as in the following session segment:

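When the program starts, it consists of only one process, sometimes called the 'parent', 'root', or 'master' process. When the routine MPI_Init executes within the root process, it causes the creation of 3 additional processes (to reach the number of processes (np) specified on the mpirun command line), sometimes called 'child' processes. (Many current MPI implementations instead have mpirun launch all np processes before main begins, with MPI_Init setting up communication among them; the program behaves the same either way.)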

Each of the processes then continues executing separate versions of the hello world program. The next statement in every program is the printf statement, and each process prints 'Hello world' as directed. Since terminal output from every program will be directed to the same terminal, we see four lines saying 'Hello world'.

Identifying the separate processes

As written, we cannot tell which 'Hello world' line was printed by which process. To identify a process we need some sort of process ID and a routine that lets a process find its own process ID. MPI assigns an integer to each process beginning with 0 for the parent process and incrementing each time a new process is created. A process ID is also called its 'rank'. MPI also provides routines that let the process determine its process ID, as well as the number of processes that have been created.

Here is an enhanced version of the Hello world program that identifies the process that writes each line of output:

When we run this program, each process identifies itself:


Note that the process numbers are not printed in ascending order. That is because the processes execute independently and execution order was not controlled in any way. The programs may print their results in different orders each time they are run.

(To find out which Origin processors and memories are used to run a program, you can turn on the MPI_DSM_VERBOSE environment variable with 'export MPI_DSM_VERBOSE=ON', or equivalent.)

To let each process perform a different task, you can use a program structure like:

Basic MPI communication routines

It is important to realize that separate processes share no memory variables. They appear to be using the same variables, but they are really using COPIES of any variable defined in the program. As a result, these programs cannot communicate with each other by exchanging information in memory variables. Instead they may use any of a large number of MPI communication routines. The two basic routines are:

- MPI_Send, to send a message to another process, and

- MPI_Recv, to receive a message from another process.

The syntax of MPI_Send is:

- data_to_send: variable of a C type that corresponds to the send_type supplied below

- send_count: number of data elements to be sent (nonnegative int)

- send_type: datatype of the data to be sent (one of the MPI datatype handles)

- destination_ID: process ID of destination (int)

- tag: message tag (int)

- comm: communicator (handle)

The syntax of MPI_Recv is:

- received_data: variable of a C type that corresponds to the receive_type supplied below

- receive_count: number of data elements expected (int)

- receive_type: datatype of the data to be received (one of the MPI datatype handles)

- sender_ID: process ID of the sending process (int)

- tag: message tag (int)

- comm: communicator (handle)

- status: status struct (MPI_Status)

The amount of information actually received can then be retrieved from the status variable, as with:

MPI_Recv blocks until the data transfer is complete and the received_data variable is available for use.

The basic datatypes recognized by MPI are:

| MPI datatype handle | C datatype |
|---|---|
| MPI_INT | int |
| MPI_SHORT | short |
| MPI_LONG | long |
| MPI_FLOAT | float |
| MPI_DOUBLE | double |
| MPI_CHAR | char |
| MPI_BYTE | unsigned char (an uninterpreted 8-bit byte) |
| MPI_PACKED | (no C equivalent; used with MPI_Pack and MPI_Unpack) |

There also exist other types like: MPI_UNSIGNED, MPI_UNSIGNED_LONG, and MPI_LONG_DOUBLE.

A common pattern of process interaction

A common pattern of interaction among parallel processes is for one, the master, to allocate work to a set of slave processes and collect results from the slaves to synthesize a final result.

The master process will execute program statements like:

In this fragment, the master program sends a contiguous portion of array1 to each slave using MPI_Send and then receives a response from each slave via MPI_Recv. In practice, the master does not have to send an array; it could send a scalar or some other MPI data type, and it could construct array1 from any components to which it has access.

Here the returned information is put in array2, which will be written over every time a different message is received. Therefore, it will probably be copied to some other variable within the receiving loop.

Note the use of the MPI constant MPI_ANY_SOURCE to allow this MPI_Recv call to receive messages from any process. In some cases, a program would need to determine exactly which process sent a message received using MPI_ANY_SOURCE. status.MPI_SOURCE will hold that information, immediately following the call to MPI_Recv.

The slave program to work with this master would resemble:

There could be many slave programs running at the same time. Each one would receive data in array2 from the master via MPI_Recv and work on its own copy of that data. Each slave would construct its own copy of array3, which it would then send to the master using MPI_Send.

A non-parallel program that sums the values in an array

The following program calculates the sum of the elements of an array. It will be followed by a parallel version of the same program using MPI calls.

Design for a parallel program to sum an array

The code below shows a common structure for including both master and slave segments in the parallel version of the example program just presented. It is composed of a short set-up section followed by a single if-else construct where the master process executes the statements between the brackets after the if statement, and the slave processes execute the statements between the brackets after the else statement.

The complete parallel program to sum an array

Here is the expanded parallel version of the same program using MPI calls.

The following table shows the values of several variables during the execution of sumarray_mpi. The information comes from a two-processor parallel run, and the values of program variables are shown in both processor memory spaces. Note that there is only one process active prior to the call to MPI_Init.

| Variable name | Before MPI_Init (Proc 0) | After MPI_Init (Proc 0) | After MPI_Init (Proc 1) | Before MPI_Send to slave (Proc 0) | Before MPI_Send to slave (Proc 1) | After MPI_Recv by slave (Proc 0) | After MPI_Recv by slave (Proc 1) | After MPI_Recv by master (Proc 0) | After MPI_Recv by master (Proc 1) |
|---|---|---|---|---|---|---|---|---|---|
| root_process | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| my_id | . | 0 | 1 | 0 | 1 | 0 | 1 | 0 | 1 |
| num_procs | . | 2 | 2 | 2 | 2 | 2 | 2 | 2 | 2 |
| num_rows | . | . | . | 6 | . | 6 | . | 6 | . |
| avg_rows_per_process | . | . | . | 3 | . | 3 | . | 3 | . |
| num_rows_received | . | . | . | . | . | . | 3 | . | 3 |
| array[0] | . | . | . | 1.0 | . | 1.0 | . | 1.0 | . |
| array[1] | . | . | . | 2.0 | . | 2.0 | . | 2.0 | . |
| array[2] | . | . | . | 3.0 | . | 3.0 | . | 3.0 | . |
| array[3] | . | . | . | 4.0 | . | 4.0 | . | 4.0 | . |
| array[4] | . | . | . | 5.0 | . | 5.0 | . | 5.0 | . |
| array[5] | . | . | . | 6.0 | . | 6.0 | . | 6.0 | . |
| array2[0] | . | . | . | . | . | . | 4.0 | . | 4.0 |
| array2[1] | . | . | . | . | . | . | 5.0 | . | 5.0 |
| array2[2] | . | . | . | . | . | . | 6.0 | . | 6.0 |
| array2[3] | . | . | . | . | . | . | . | . | . |
| array2[4] | . | . | . | . | . | . | . | . | . |
| array2[5] | . | . | . | . | . | . | . | . | . |
| partial_sum | . | . | . | . | . | . | . | 6.0 | 15.0 |
| sum | . | . | . | . | . | . | . | 21.0 | . |

Logging and tracing MPI activity

It is possible to use mpirun to record MPI activity, by using the options -mpilog and -mpitrace. For more information about this facility see man mpirun.

Collective operations

MPI_Send and MPI_Recv are 'point-to-point' communications functions. That is, they involve one sender and one receiver. MPI includes a large number of subroutines for performing 'collective' operations. Collective operations are performed by MPI routines that are called by each member of a group of processes that want some operation to be performed for them as a group. A collective function may specify one-to-many, many-to-one, or many-to-many message transmission.

MPI supports three classes of collective operations:

- synchronization,

- data movement, and

- collective computation

Synchronization

The MPI_Barrier function can be used to synchronize a group of processes. To synchronize a group of processes, each one must call MPI_Barrier when it has reached a point where it can go no further until it knows that all its cohorts have reached the same point. Once a process has called MPI_Barrier, it will be blocked until all processes in the group have also called MPI_Barrier.

Collective data movement

There are several routines for performing collective data distribution tasks:

- MPI_Bcast: Broadcast data to other processes

- MPI_Gather, MPI_Gatherv: Gather data from participating processes into a single structure

- MPI_Scatter, MPI_Scatterv: Break a structure into portions and distribute those portions to other processes

- MPI_Allgather, MPI_Allgatherv: Gather data from different processes into a single structure that is then sent to all participants (Gather-to-all)

- MPI_Alltoall, MPI_Alltoallv: Gather data and then scatter it to all participants (All-to-all scatter/gather)

The routines with 'v' suffixes move variable-sized blocks of data.

The subroutine MPI_Bcast sends a message from one process to allprocesses in a communicator.

When processes are ready to share information with other processes as part of a broadcast, ALL of them must execute a call to MPI_Bcast. There is no separate MPI call to receive a broadcast.

MPI_Bcast could have been used in the program sumarray_mpi presented earlier, in place of the MPI_Send loop that distributed data to each process. Doing so would have resulted in excessive data movement, of course. A better solution would be MPI_Scatter or MPI_Scatterv.

The subroutines MPI_Scatter and MPI_Scatterv take an input array, break the input data into separate portions and send a portion to each one of the processes in a communicating group.

- data_to_send: variable of a C type that corresponds to the MPI send_type supplied below

- send_count: number of data elements to send (int)

- send_count_array: array with an entry for each participating process containing the number of data elements to send to that process (int)

- send_start_array: array with an entry for each participating process containing the displacement relative to the start of data_to_send for each data segment to send (int)

- send_type: datatype of elements to send (one of the MPI datatype handles)

- receive_data: variable of a C type that corresponds to the MPI receive_type supplied below

- receive_count: number of data elements to receive (int)

- receive_type: datatype of elements to receive (one of the MPI datatype handles)

- sender_ID: process ID of the sending (root) process (int)

- comm: communicator (handle)

The routine MPI_Scatterv could have been used in the program sumarray_mpi presented earlier, in place of the MPI_Send loop that distributed data to each process.


MPI_Bcast, MPI_Scatter, and other collective routines build a communication tree among the participating processes to minimize message traffic. If there are N processes involved, the broadcasting process would otherwise have to send N-1 messages itself, one after another. If instead a tree is built so that the broadcasting process sends the broadcast to 2 processes, and they each send it on to 2 other processes, the transmissions at each level of the tree proceed in parallel and the broadcast completes in only O(log N) steps.

Collective computation routines

Collective computation is similar to collective data movement with the additional feature that data may be modified as it is moved. The following routines can be used for collective computation.

- MPI_Reduce: Perform a reduction operation. That is, apply some operation to some operand in every participating process. For example, add an integer residing in every process together and put the result in a process specified in the MPI_Reduce argument list.

- MPI_Allreduce: Perform a reduction leaving the result in all participating processes

- MPI_Reduce_scatter: Perform a reduction and then scatter the result

- MPI_Scan: Perform a reduction leaving partial results (computed up to the point of a process's involvement in the reduction tree traversal) in each participating process. (parallel prefix)

When processes are ready to share information with other processes as part of a data reduction, all of the participating processes execute a call to MPI_Reduce, which uses local data to calculate each process's portion of the reduction operation and communicates the local result to other processes as necessary. Only the target_process_ID receives the final result.

MPI_Reduce could have been used in the program sumarray_mpi presented earlier, in place of the MPI_Recv loop that collected partial sums from each process.

Collective computation built-in operations

Many of the MPI collective computation routines take both built-in and user-defined combination functions. The built-in functions are:

| Operation handle | Operation |
|---|---|
| MPI_MAX | Maximum |
| MPI_MIN | Minimum |
| MPI_PROD | Product |
| MPI_SUM | Sum |
| MPI_LAND | Logical AND |
| MPI_LOR | Logical OR |
| MPI_LXOR | Logical Exclusive OR |
| MPI_BAND | Bitwise AND |
| MPI_BOR | Bitwise OR |
| MPI_BXOR | Bitwise Exclusive OR |
| MPI_MAXLOC | Maximum value and location |
| MPI_MINLOC | Minimum value and location |

A collective operation example

The following program integrates the function sin(X) over the range 0 to 2 pi. It will be followed by a parallel version of the same program that uses the MPI library.

The next program is an MPI version of the program above. It uses MPI_Bcast to send information to each participating process and MPI_Reduce to get a grand total of the areas computed by each participating process.

Simultaneous send and receive

The subroutine MPI_Sendrecv exchanges messages with another process. A send-receive operation is useful for avoiding some kinds of unsafe interaction patterns and for implementing remote procedure calls.

A message sent by a send-receive operation can be received by MPI_Recv and a send-receive operation can receive a message sent by an MPI_Send.

- data_to_send: variable of a C type that corresponds to the MPI send_type supplied below

- send_count: number of data elements to send (int)

- send_type: datatype of elements to send (one of the MPI datatype handles)

- destination_ID: process ID of the destination (int)

- send_tag: send tag (int)

- received_data: variable of a C type that corresponds to the MPI receive_type supplied below

- receive_count: number of data elements to receive (int)

- receive_type: datatype of elements to receive (one of the MPI datatype handles)

- sender_ID: process ID of the sender (int)

- receive_tag: receive tag (int)

- comm: communicator (handle)

- status: status object (MPI_Status)

MPI tags

MPI_Send and MPI_Recv, as well as other MPI routines, allow the user to specify a tag value with each transmission. These tag values may be used to specify the message type, or 'context,' in a situation where a program may receive messages of several types during the same program. The receiver simply checks the tag value to decide what kind of message it has received.

MPI communicators

Every MPI communication operation involves a 'communicator.' Communicators identify the group of processes involved in a communication operation and/or the context in which it occurs. The source and destination processes specified in point-to-point routines like MPI_Send and MPI_Recv must be members of the specified communicator and the two calls must reference the same communicator. Collective operations include just those processes identified by the communicator specified in the calls.

The communicator MPI_COMM_WORLD is defined by default for all MPI runs, and includes all processes defined by MPI_Init during that run. Additional communicators can be defined that include all or part of those processes. For example, suppose a group of processes needs to engage in two different reductions involving disjoint sets of processes. A communicator can be defined for each subset of MPI_COMM_WORLD and specified in the two reduction calls to manage message transmission.

MPI_Comm_split can be used to create a new communicator composed of a subset of another communicator. MPI_Comm_dup can be used to create a new communicator composed of all of the members of another communicator. This may be useful for managing interactions within a set of processes in place of message tags.

More information

This short introduction omits many MPI topics and routines, and covers most topics only lightly. In particular, it omits discussions of topologies, unsafe communication and non-blocking communication. For additional information concerning these and other topics please consult:

- the major MPI Web site, where you will find versions of the standards: http://www.mcs.anl.gov/mpi

- the books:

  - Gropp, Lusk, and Skjellum, Using MPI: Portable Parallel Programming with the Message Passing Interface, MIT Press, 1994

  - Foster, Ian, Designing and Building Parallel Programs, available in both hardcopy (Addison-Wesley Publishing Co., 1994) and on-line versions,

  - Pacheco, Peter, A User's Guide to MPI, which gives a tutorial introduction extended to cover derived types, communicators and topologies, or

- the newsgroup comp.parallel.mpi

Exercises

Here are some exercises for continuing your investigation of MPI:

- Convert the hello world program to print its messages in rank order.

- Convert the example program sumarray_mpi to use MPI_Scatter and/or MPI_Reduce.

- Write a program to find all positive primes up to some maximum value, using MPI_Recv to receive requests for integers to test. The master will loop from 2 to the maximum value and, on each iteration:

  - issue MPI_Recv and wait for a message from any slave (MPI_ANY_SOURCE),

  - interpret the message: if it is zero, the process is just starting; if it is negative, it is a non-prime; if it is positive, it is a prime,

  - use MPI_Send to send a number to test.

- Write a program to send a token from processor to processor in a loop.

Document prepared by:

Daniel Thomasset and

Michael Grobe

Academic Computing Services

The University of Kansas

with assistance and overheads provided by

The National Computational Science Alliance (NCSA) at

The University of Illinois

Parallel programs enable users to fully utilize the multi-node structure of supercomputing clusters. Message Passing Interface (MPI) is a standard used to allow several different processors on a cluster to communicate with each other. In this tutorial we will be using the Intel C++ Compiler, GCC, IntelMPI, and OpenMPI to create a multiprocessor ‘hello world’ program in C++. This tutorial assumes the user has experience in both the Linux terminal and C++.

Resources:

Setup and “Hello, World”

Begin by logging into the cluster and using ssh to log in to a compile node. This can be done with the command:

Next we must load MPI into our environment. Begin by loading in your choice of C++ compiler and its corresponding MPI library. Use the following commands if using the GNU C++ compiler:

GNU C++ Compiler

Or, use the following commands if you prefer to use the Intel C++ compiler:

Intel C++ Compiler

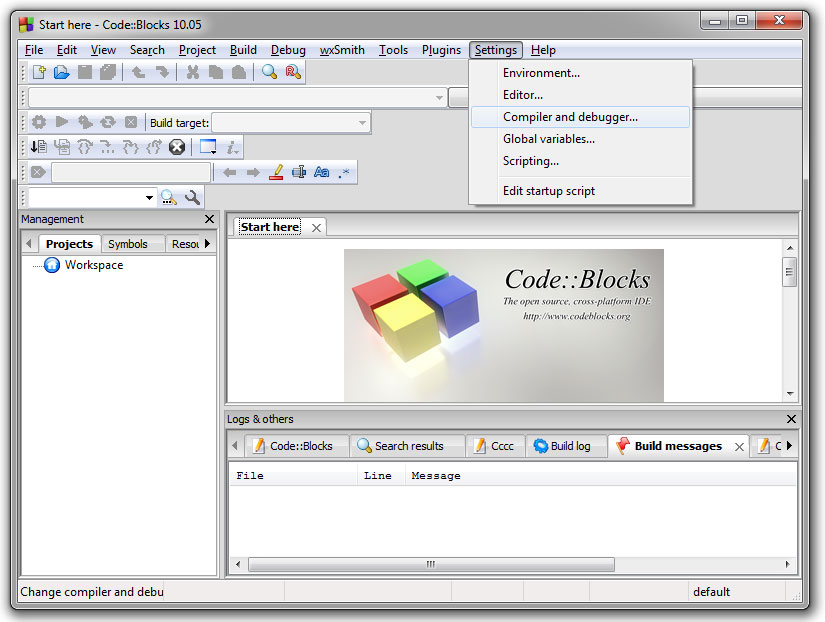

Mpi.h Dev C 2b 2b 2c

This should prepare your environment with all the necessary tools to compile and run your MPI code. Let’s now begin to construct our C++ file. In this tutorial, we will name our code file: hello_world_mpi.cpp

Open hello_world_mpi.cpp and begin by including the C standard library <stdio.h> and the MPI library <mpi.h>, and by constructing the main function of the C++ code:

Now let’s set up several MPI directives to parallelize our code. In this ‘Hello World’ tutorial we’ll be utilizing the following four directives:

MPI_Init():

MPI_Comm_size():

MPI_Comm_rank():

MPI_Finalize():

These four directives should be enough to get our parallel ‘hello world’ running. We will begin by creating two variables, process_Rank and size_Of_Cluster, to store an identifier for each of the parallel processes and the number of processes running in the cluster respectively. We will also implement the MPI_Init function which will initialize the MPI communicator:

Let’s now obtain some information about our cluster of processors and print the information out for the user. We will use the functions MPI_Comm_size() and MPI_Comm_rank() to obtain the count of processes and the rank of a process respectively:

Lastly let’s close the environment using MPI_Finalize():

Now the code is complete and ready to be compiled. Because this is an MPI program, we have to use a specialized compiler. Be sure to use the correct command based on which compiler you have loaded.

OpenMPI

Intel MPI

This will produce an executable we can pass to Summit as a job. In order to execute MPI compiled code, a special command must be used:

The flag -np specifies the number of processors that are to be utilized in execution of the program.

In your job script, load the same compiler and OpenMPI choices you used above to compile the program, and run the job with slurm to execute the application. Your job script should look something like this:

OpenMPI

Intel MPI

It is important to note that on Summit, there is a total of 24 cores per node. For applications that require more than 24 processes, you will need to request multiple nodes in your job. Our output file should look something like this:

Ref: http://www.dartmouth.edu/~rc/classes/intro_mpi/hello_world_ex.html

MPI Barriers and Synchronization

As with many other parallel programming utilities, synchronization is an essential tool in thread safety and in ensuring that certain sections of code are handled at certain points. MPI_Barrier is a process lock that holds each process at a certain line of code until all processes have reached that line in code. MPI_Barrier can be called as such:

To get a handle on barriers, let’s modify our “Hello World” program so that it prints out each process in order of process rank. Starting with our “Hello World” code from the previous section, begin by nesting our print statement in a loop:

Next, let’s implement a conditional statement in the loop to print only when the loop iteration matches the process rank.

Lastly, implement the barrier function in the loop. This will ensure that all processes are synchronized when passing through the loop.

Compiling and running this code will result in this output:

Message Passing

Message passing is the primary utility in the MPI application interface that allows for processes to communicate with each other. In this tutorial, we will learn the basics of message passing between 2 processes.

Message passing in MPI is handled by the corresponding functions and their arguments:


The arguments are as follows:

MPI_Send

MPI_Recv

Let’s implement message passing in an example:

Example

We will create a two-process program that will pass the number 42 from one process to another. We will use our “Hello World” program as a starting point for this program. Let’s begin by creating a variable to store some information.

Now create if and else if conditionals that specify the appropriate process to call the MPI_Send() and MPI_Recv() functions. In this example we want the first process (rank 0) to send out a message containing the integer 42 to the second process (rank 1).

Lastly we must call MPI_Send() and MPI_Recv(). We will pass the following parameters into the functions:

Let’s implement these functions in our code:

Compiling and running our code with 2 processes will result in the following output:

Group Operators: Scatter and Gather

Group operators are very useful for MPI. They allow for swaths of data to be distributed from a root process to all other available processes, or data from all processes can be collected at one process. These operators can eliminate the need for a surprising amount of boilerplate code via the use of two functions:

MPI_Scatter:

MPI_Gather:

In order to get a better grasp on these functions, let’s go ahead and create a program that will utilize the scatter function. Note that the gather function (not shown in the example) works similarly, and is essentially the converse of the scatter function. Further examples which utilize the gather function can be found in the MPI tutorials listed as resources at the beginning of this document.

Example

We will create a program that scatters one element of a data array to each process. Specifically, this code will scatter the four elements of an array to four different processes. We will start with a basic C++ main function along with variables to store process rank and number of processes.

Now let’s set up the MPI environment using MPI_Init, MPI_Comm_size, MPI_Comm_rank, and MPI_Finalize:

Next let’s generate an array named distro_Array to store four numbers. We will also create a variable called scattered_Data that we shall scatter the data to.

Now we will begin the use of group operators. We will use the operator scatter to distribute distro_Array into scattered_Data. Let’s take a look at the parameters we will use in this function:

Let’s see this implemented in code. We will also write a print statement following the scatter call:

Running this code will print out the four numbers in the distro array as four separate numbers each from different processors (note the order of ranks isn’t necessarily sequential):